
Nvidia Ups Ante in AI Chip Game with New Blackwell Architecture

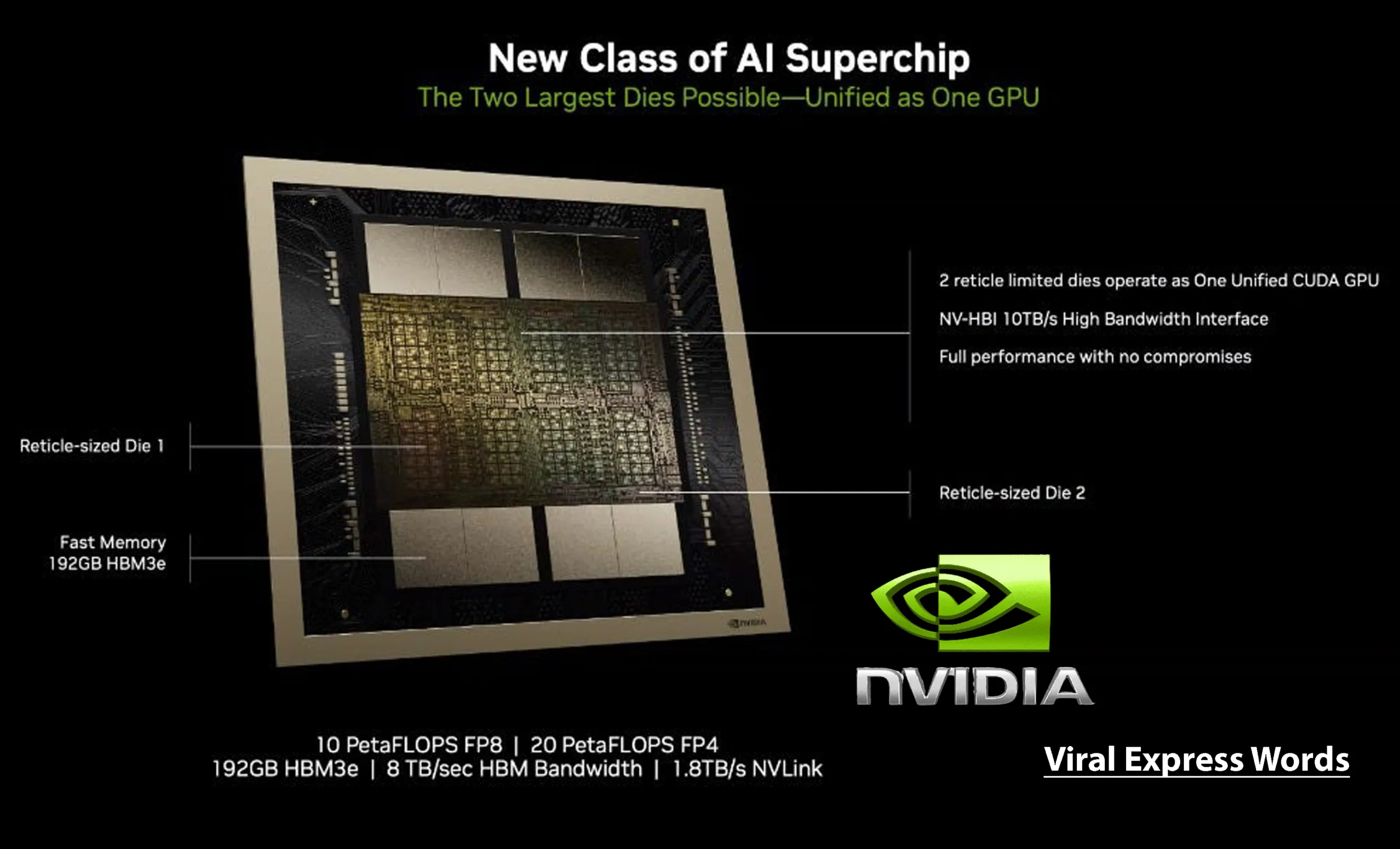

Nvidia is pumping up the power in its line of artificial intelligence chips with the announcement Monday of its Blackwell GPU architecture at its first in-person GPU Technology Conference (GTC) in five years. The chip, which is designed for large data centers such as those that power AWS, Azure, and Google, offers 20 petaflops of AI performance and, according to Nvidia, is 4x faster on AI-training workloads, 30x faster on AI-inferencing workloads, and up to 25x more power efficient than its predecessor.

Nvidia’s New Blackwell Chip: A Leap Forward in Power and Efficiency

Compared to its predecessor, the H100 “Hopper,” the B200 Blackwell is both more powerful and more energy efficient, Nvidia maintained. Training an AI model the size of GPT-4, for example, would take 8,000 H100 chips and 15 megawatts of power; the same task would take only 2,000 B200 chips and four megawatts. “This is the company’s first big advance in chip design since the debut of the Hopper architecture two years ago,” Bob O’Donnell, founder and chief analyst of Technalysis Research, wrote in his weekly LinkedIn newsletter.
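The scale of that comparison is easier to see with the arithmetic written out. The Python sketch below uses only the chip counts and megawatt figures Nvidia quoted. The per-chip averages are an assumption for illustration: they treat the quoted power as spread evenly across the chips, which is a simplification rather than an Nvidia figure.

```python
# Figures from Nvidia's GPT-4-scale training comparison.
H100_CHIPS, H100_MEGAWATTS = 8_000, 15.0
B200_CHIPS, B200_MEGAWATTS = 2_000, 4.0


def reduction(before: float, after: float) -> float:
    """How many times smaller `after` is than `before`."""
    return before / after


chip_reduction = reduction(H100_CHIPS, B200_CHIPS)
power_reduction = reduction(H100_MEGAWATTS, B200_MEGAWATTS)

# Rough average power per chip in kilowatts. Assumption: the quoted
# megawatts are split evenly across chips (any system overhead included).
kw_per_h100 = H100_MEGAWATTS * 1_000 / H100_CHIPS
kw_per_b200 = B200_MEGAWATTS * 1_000 / B200_CHIPS

print(f"{chip_reduction:.2f}x fewer chips, {power_reduction:.2f}x less power")
print(f"about {kw_per_h100:.3f} kW per H100 vs {kw_per_b200:.3f} kW per B200")
```

On these numbers, each B200 draws slightly more power on average than an H100, but because only a quarter as many chips are needed, total power falls by roughly 3.75x.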

Repackaging Exercise

However, Sebastien Jean, CTO of Phison Electronics, a Taiwanese electronics company, called the chip “a repackaging exercise.”

“It’s good, but it’s not groundbreaking,” he told TechNewsWorld. “It will run faster, use less power, and allow more compute into a smaller area, but from a technologist perspective, they just squished it smaller without really changing anything fundamental.”

“That means that their results are easily replicated by their competitors,” he said. “Though there is value in being first, because while your competition catches up, you move on to the next thing.”

“When you force your competition into a permanent catch-up game, unless they have very strong leadership, they will fall into a ‘fast follower’ mentality without realizing it,” he said.

“By being aggressive and being first,” he continued, “Nvidia can cement the idea that they are the only true innovators, which drives further demand for their products.”

Although Blackwell may be a repackaging exercise, he added, it has a real net benefit. “In practical terms, people using Blackwell will be able to do more compute faster for the same power and space budget,” he noted. “That will allow solutions based on Blackwell to outpace and outperform their competition.”

Plug-Compatible With Past

O’Donnell highlighted the Blackwell architecture’s second-generation transformer engine, which cuts the precision of AI floating-point calculations from eight bits to four bits, effectively doubling compute performance and the size of models that can be supported.
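Why halving the bit width "doubles" performance and model size can be sketched with simple arithmetic. The Python below is a simplified model, not Nvidia's method: it counts only the memory needed for model weights and assumes a fixed-width datapath. The 1-trillion-parameter model, the 100 GB memory budget, and the 256-bit word are hypothetical values chosen for illustration.

```python
def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights alone (ignores activations and other state)."""
    return num_params * bits_per_param // 8


def max_params(memory_bytes: int, bits_per_param: int) -> int:
    """Largest parameter count whose weights fit in `memory_bytes`."""
    return memory_bytes * 8 // bits_per_param


def values_per_word(word_bits: int, bits_per_value: int) -> int:
    """How many numbers fit in one fixed-width word of a hypothetical datapath."""
    return word_bits // bits_per_value


PARAMS = 10**12       # hypothetical 1-trillion-parameter model
BUDGET = 100 * 10**9  # hypothetical 100 GB memory budget
WORD_BITS = 256       # hypothetical datapath width

for bits in (8, 4):
    print(
        f"{bits}-bit: weights need {weight_bytes(PARAMS, bits) / 10**9:.0f} GB, "
        f"{max_params(BUDGET, bits):,} params fit in 100 GB, "
        f"{values_per_word(WORD_BITS, bits)} values per {WORD_BITS}-bit word"
    )
```

In this model, going from eight to four bits halves the weight memory, doubles the parameters that fit in the same budget, and doubles the values each word of hardware handles per step.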

Seamless Integration: Nvidia’s New Blackwell Microchip Compatible with Predecessors

The new chips are also compatible with their predecessors. “If you already have Nvidia’s systems with the H100, Blackwell is plug-compatible,” observed Jack E. Gold, founder and principal analyst with Gold Associates, an IT advisory company in Northborough, Mass.

For more information: Nvidia Ups Ante in AI Chip Game with New Blackwell Architecture (technewsworld.com)

Nvidia’s Blackwell Chips and Inference Microservices Unveiled at GTC

Gold added that the Blackwell chips could help developers produce better AI applications. “The more data points you can analyze, the better the AI gets,” he explained. “What Nvidia is talking about with Blackwell is instead of being able to analyze billions of data points, you can analyze trillions.”

Nvidia also announced Nvidia Inference Microservices (NIM) at GTC. Equity strategist Brian Colello suggests that NIM tools, built on Nvidia’s CUDA platform, can help businesses integrate custom applications and AI models into production environments, potentially bringing new AI products to market.

Helping Deploy AI

Shane Rau, a semiconductor analyst with IDC, suggests that while big companies can quickly adopt and deploy new technologies, small and medium businesses often lack the resources to buy, customize, and deploy them. NIM can help these businesses adopt and deploy new technologies more easily.

“With NIM, you’ll find models specific to what you want to do,” he told TechNewsWorld. “Not everyone wants to do AI in general. They want to do AI that’s specifically relevant to their company or enterprise.”

NIM, while not as exciting as the latest hardware designs, matters in the long run. The initiative aims to speed up and streamline the transition from GenAI experiments to real-world production for companies that lack enough data scientists and GenAI programming experts, so it is good to see Nvidia helping ease this process.

Licensing the new microservices on a per-GPU, per-hour basis could give Nvidia a new revenue stream and business model, and potentially a long-lasting, more diversified source of income. Although it is early days, this is an important area to watch.

Entrenched Leader

Rau predicts Nvidia will remain the preferred AI processing platform, while AMD and Intel may gain a small share of the GPU market. Competition for market share from other kinds of AI chips, such as microprocessors, FPGAs, and ASICs, will also continue.

Abdullah Anwer Ahmed, founder of Serene Data Ops, asserted that Nvidia’s market dominance is highly secure and faces minimal threats.

The company’s CUDA software and superior hardware, he noted, have been the foundation of the AI segment for over a decade.

The primary threat, he said, is that Amazon, Google, and Microsoft/OpenAI are developing their own chips optimized around these models. “Google already has their ‘TPU’ chip in production. Amazon and OpenAI have hinted at similar projects.”

“In any case, building one’s own GPUs is an option only available to the absolute largest companies,” he added. “Most of the LLM industry will continue to buy Nvidia GPUs.”

Conclusion: Nvidia’s Blackwell Chip Redefines AI Chip Technology

Nvidia’s introduction of the Blackwell GPU architecture marks a major step in the advancement of AI chip technology. With 20 petaflops of AI performance and large energy-efficiency gains, Blackwell is positioned to reshape data center computing. Even critics who call it a repackaging exercise acknowledge that it delivers faster computation with lower power consumption, which should drive innovation in AI applications.

Because Blackwell is plug-compatible with existing H100 systems, organizations already running Nvidia hardware should be able to adopt it with little friction. The introduction of Nvidia Inference Microservices (NIM) further eases deployment, helping businesses put custom AI applications and pretrained models into production.

FAQs

What is Nvidia’s Blackwell Chip?

Nvidia’s Blackwell chip is a GPU architecture designed specifically for artificial intelligence (AI) workloads. It is aimed at large data centers, and Nvidia says it outperforms prior generations in both performance and energy efficiency.

What are the key features of the Blackwell Chip?

According to Nvidia, the Blackwell chip delivers 20 petaflops of AI performance and, compared to its predecessor, is 4x faster on AI-training workloads, 30x faster on AI-inferencing workloads, and up to 25x more power efficient.

How does the Blackwell Chip compare to its predecessor, the H100 “Hopper”?

Compared to the H100 “Hopper,” the Blackwell chip offers significant improvements in both power and efficiency. According to Nvidia, training an AI model the size of GPT-4 would take 8,000 H100 chips and 15 megawatts of power, but only 2,000 B200 chips and four megawatts with Blackwell.

Is the Blackwell Chip compatible with existing Nvidia systems?

Yes, the Blackwell Chip is designed to be plug-compatible with its predecessors. This means that businesses with Nvidia’s H100 systems can easily upgrade to Blackwell chips without requiring significant modifications.

What are Nvidia Inference Microservices (NIM), and how do they relate to the Blackwell Chip?

Nvidia Inference Microservices (NIM) are tools built on Nvidia’s CUDA platform that help enterprises integrate custom applications and AI models into production settings. Nvidia announced NIM alongside the Blackwell chip at GTC.

What advantages does the Blackwell Chip offer for developers and businesses?

The Blackwell Chip enables developers to produce better AI applications by providing increased computational power and efficiency. With the ability to analyze trillions of data points, Blackwell facilitates faster and more accurate AI processing, leading to improved performance in various applications.
